EchoSpeech: Continuous Silent Speech Recognition on Minimally-obtrusive Eyewear Powered by Acoustic Sensing

Published in The Proceedings of the CHI Conference on Human Factors in Computing Systems (CHI), 2023

Recommended citation: Ruidong Zhang, Ke Li, Yihong Hao, Yufan Wang, Zhengnan Lai, François Guimbretière, and Cheng Zhang. 2023. EchoSpeech: Continuous Silent Speech Recognition on Minimally-obtrusive Eyewear Powered by Acoustic Sensing. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI). Association for Computing Machinery, New York, NY, USA, Article 852, 1–18. https://doi.org/10.1145/3544548.3580801

Selected Media Coverage: Cornell Chronicle, Engadget

April 23–28, 2023, Hamburg, Germany

Keywords: Silent Speech Recognition, Acoustic Sensing, Smart Glasses

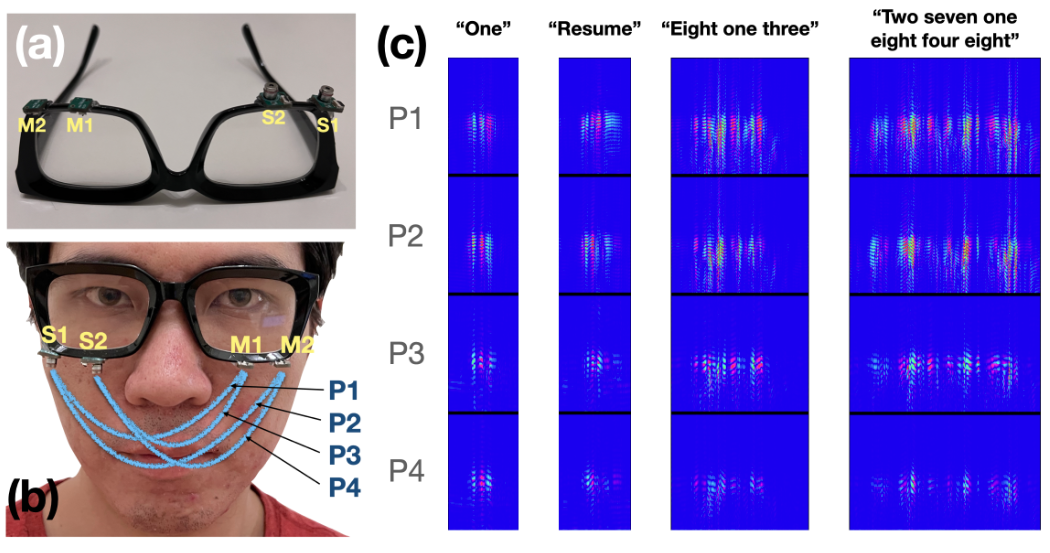

We present EchoSpeech, a minimally-obtrusive silent speech interface (SSI) powered by low-power active acoustic sensing. EchoSpeech uses speakers and microphones mounted on a glasses frame and emits inaudible sound waves towards the skin. By analyzing echoes from multiple paths, EchoSpeech captures subtle skin deformations caused by silent utterances and uses them to infer silent speech. In a user study with 12 participants, we demonstrate that EchoSpeech can recognize 31 isolated commands and connected digit sequences of 3 to 6 digits with Word Error Rates (WER) of 4.5% (std 3.5%) and 6.1% (std 4.2%), respectively. We further evaluated EchoSpeech under scenarios including walking and noise injection to test its robustness. We then demonstrated EchoSpeech in real-time demo applications operating at 73.3 mW, with the real-time pipeline implemented on a smartphone and requiring only 1 to 6 minutes of training data. We believe that EchoSpeech takes a solid step towards minimally-obtrusive wearable SSI for real-life deployment.
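For readers unfamiliar with the metric, the sketch below shows one common way to compute Word Error Rate: the word-level edit distance (substitutions, deletions, and insertions) between a reference and a recognized sequence, divided by the number of reference words. It is a generic illustration, not the evaluation code used in the paper; the function name `word_error_rate` and the example digit strings are assumptions made for this sketch.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words.

    Hypothetical helper for illustration only; not taken from the paper.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] = edit distance between the first i-1 reference words
    # and the first j hypothesis words (rolling one-row DP table).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr

    return prev[-1] / len(ref)


if __name__ == "__main__":
    # Made-up four-digit utterance with one digit dropped by the recognizer.
    print(word_error_rate("one two three four", "one two four"))
```

Because insertions count as errors, WER computed this way can exceed 100% when the hypothesis is much longer than the reference.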