HPSpeech: Silent Speech Interface for Commodity Headphones

Published in The Proceedings of the International Symposium on Wearable Computers (ISWC), 2023

Recommended citation: Ruidong Zhang, Hao Chen, Devansh Agarwal, Richard Jin, Ke Li, François Guimbretière, and Cheng Zhang. 2023. HPSpeech: Silent Speech Interface for Commodity Headphones. In Proceedings of the 2023 ACM International Symposium on Wearable Computers (ISWC). Association for Computing Machinery, New York, NY, USA, 60–65. https://dl.acm.org/doi/10.1145/3594738.3611365

Best Paper Honorable Mention Award

October 8-12, 2023, Cancún, Mexico

Keywords: Silent Speech, Acoustic Sensing, Headphones, Commodity-off-the-shelf (COTS)

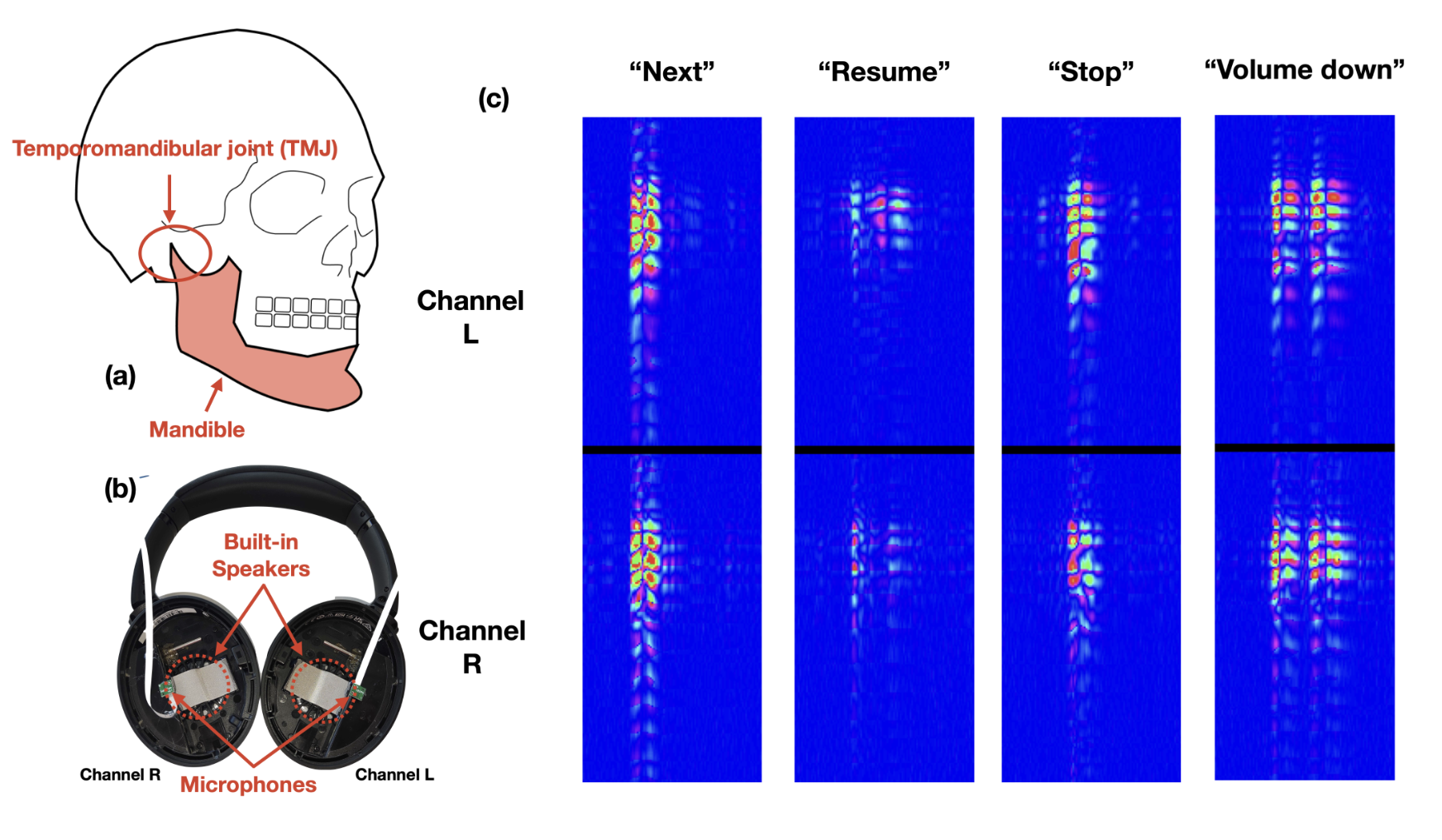

We present HPSpeech, a silent speech interface for commodity headphones. HPSpeech uses the headphones' existing speakers to emit inaudible acoustic signals. Movements of the temporomandibular joint (TMJ) during speech modify the reflection pattern of these signals, which are captured by a microphone positioned inside the headphones. To evaluate HPSpeech, we tested it on two headphones with a total of 18 participants. The results demonstrated that HPSpeech recognized 8 popular silent speech commands for controlling a music player with an accuracy over 90%. While our tests use modified commodity hardware (both with and without active noise cancellation), our results show that sensing the movement of the TMJ could be as simple as a firmware update for ANC headsets, which already include a microphone inside the ear cup. This leads us to believe that this technique has great potential for rapid deployment in the near future. We further discuss the challenges that need to be addressed before deploying HPSpeech at scale.
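The sensing principle described above (emit an inaudible signal, record its reflection, and look at how the reflection changes) can be illustrated with a minimal sketch. This is not the paper's pipeline: the abstract does not specify the transmitted waveform, so the linear chirp, the 48 kHz sample rate, the 18-21 kHz band, and the helper names `make_chirp`, `simulate_reflection`, and `echo_profile` are all assumptions made for illustration.

```python
import numpy as np

# All constants below are illustrative assumptions, not values from the paper.
SAMPLE_RATE = 48_000                   # Hz
F_START, F_END = 18_000.0, 21_000.0    # Hz, a band most adults cannot hear
CHIRP_LEN = 600                        # samples per sweep


def make_chirp(n=CHIRP_LEN, fs=SAMPLE_RATE, f0=F_START, f1=F_END):
    """Return one linear frequency sweep from f0 to f1, n samples long."""
    t = np.arange(n) / fs
    duration = n / fs
    phase = 2 * np.pi * (f0 * t + 0.5 * (f1 - f0) / duration * t ** 2)
    return np.cos(phase)


def simulate_reflection(chirp, delay, gain=0.5):
    """Hypothetical stand-in for the microphone: one delayed, attenuated echo."""
    received = np.zeros(2 * len(chirp))
    received[delay:delay + len(chirp)] += gain * chirp
    return received


def echo_profile(received, chirp):
    """Cross-correlate the recording with the transmitted chirp.

    Index k of the result is the correlation at a delay of k samples.
    """
    full = np.correlate(received, chirp, mode="full")
    return full[len(chirp) - 1:]       # keep non-negative delays only


chirp = make_chirp()
received = simulate_reflection(chirp, delay=25)
profile = echo_profile(received, chirp)
estimated_delay = int(np.argmax(np.abs(profile)))
print(f"strongest reflection at a delay of {estimated_delay} samples")
```

In a real system, a sequence of such profiles computed over successive sweeps would change as the jaw moves, and those changes would be what a recognition model consumes. How HPSpeech actually processes and classifies the signal is described in the paper, not here.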