EchoNose: Sensing Mouth, Breathing and Tongue Gestures inside Oral Cavity using a Non-contact Nose Interface

Published in The Proceedings of the International Symposium on Wearable Computers (ISWC), 2023

Recommended citation: Rujia Sun, Xiaohe Zhou, Benjamin Steeper, Ruidong Zhang, Sicheng Yin, Ke Li, Shengzhang Wu, Sam Tilsen, Francois Guimbretiere, and Cheng Zhang. 2023. EchoNose: Sensing Mouth, Breathing and Tongue Gestures inside Oral Cavity using a Non-contact Nose Interface. In Proceedings of the 2023 ACM International Symposium on Wearable Computers (ISWC). Association for Computing Machinery, New York, NY, USA, 22–26. https://dl.acm.org/doi/10.1145/3594738.3611358

October 8-12, 2023, Cancún, Mexico

Keywords: Nose Interface, Tongue Gestures, Breathing Patterns, Silent Speech, Acoustic Sensing

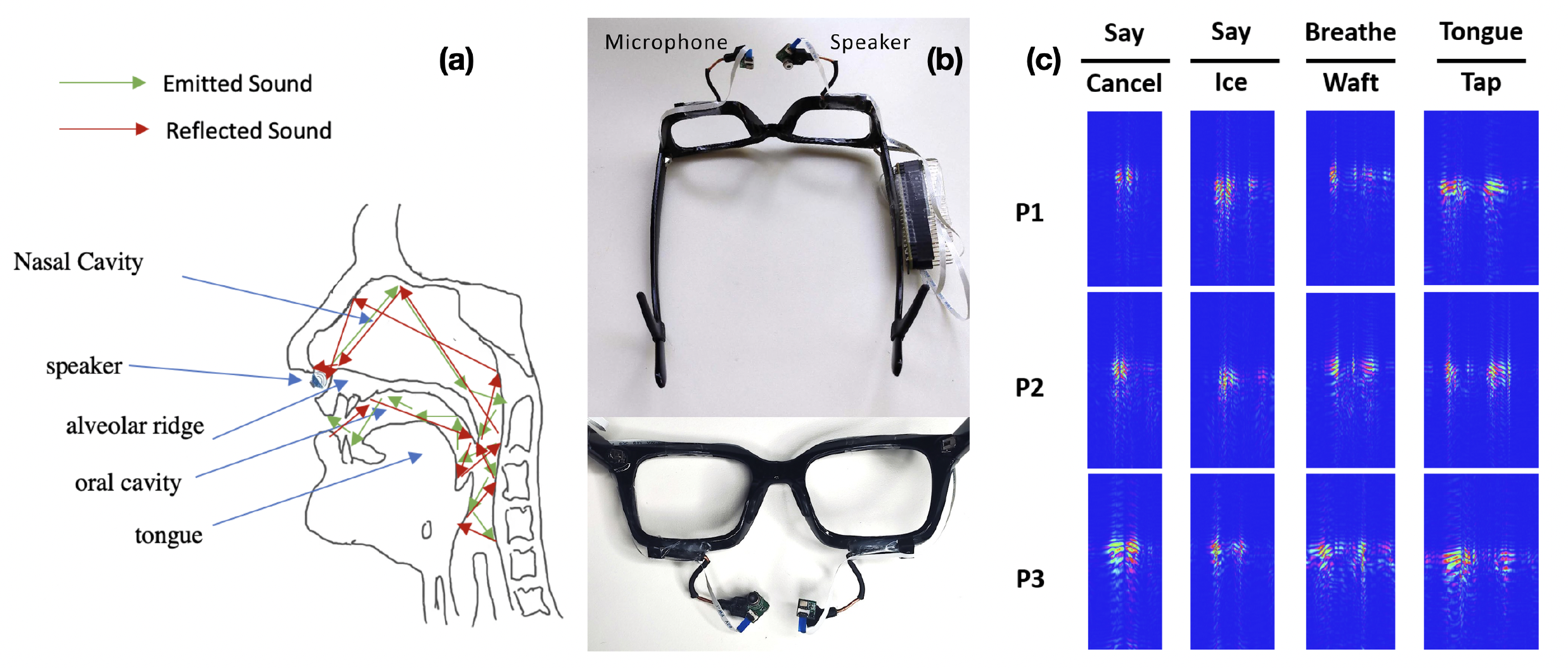

Sensing movements and gestures inside the oral cavity has been a long-standing challenge for the wearable research community. This paper introduces EchoNose, a novel nose interface that explores a unique sensing approach to recognize mouth, breathing, and tongue gestures by analyzing acoustic signal reflections inside the nasal and oral cavities. The interface incorporates a speaker and a microphone placed at the nostrils, which emit inaudible acoustic signals and capture the corresponding reflections. The received signals are processed using a customized data processing and machine learning pipeline, enabling the distinction of 16 gestures involving speech, tongue, and breathing. A user study with 10 participants demonstrates that EchoNose achieves an average accuracy of 93.7% in recognizing these 16 gestures. Based on these promising results, we discuss the potential opportunities and challenges of applying this nose interface in future applications.
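The emit-and-capture idea described above can be illustrated with a minimal sketch. The abstract does not specify the signal design or the processing pipeline, so everything below is an assumption made for illustration: the 48 kHz sample rate, the 18 to 21 kHz chirp band, the chirp length, the simulated reflection, and the use of cross-correlation to locate the echo. The function names `make_chirp`, `simulate_received`, and `echo_profile` are hypothetical and do not come from the paper.

```python
import numpy as np

FS = 48_000                    # sample rate in Hz (assumed, not from the paper)
F0, F1 = 18_000.0, 21_000.0    # near-inaudible sweep band in Hz (assumed)
CHIRP_LEN = 600                # samples per chirp (assumed)


def make_chirp(fs=FS, f0=F0, f1=F1, n=CHIRP_LEN):
    """Return a Hann-windowed linear chirp sweeping from f0 to f1."""
    t = np.arange(n) / fs
    sweep_rate = (f1 - f0) / (n / fs)          # Hz per second
    phase = 2.0 * np.pi * (f0 * t + 0.5 * sweep_rate * t ** 2)
    return np.sin(phase) * np.hanning(n)


def simulate_received(chirp, delay, total_len, gain=0.5, noise=0.01, seed=0):
    """Simulate a microphone frame: one attenuated, delayed echo plus noise.

    This stands in for a real recording; a real cavity would produce
    many overlapping reflections.
    """
    rng = np.random.default_rng(seed)
    rx = noise * rng.standard_normal(total_len)
    rx[delay:delay + len(chirp)] += gain * chirp
    return rx


def echo_profile(received, chirp):
    """Cross-correlate the received frame with the transmitted chirp.

    With mode="valid", index k of the result corresponds to an echo
    delayed by k samples.
    """
    return np.abs(np.correlate(received, chirp, mode="valid"))


chirp = make_chirp()
frame = simulate_received(chirp, delay=37, total_len=CHIRP_LEN + 200)
profile = echo_profile(frame, chirp)
estimated_delay = int(np.argmax(profile))      # strongest reflection, in samples
```

In a sketch like this, a sequence of such profiles over time could serve as the input features for a gesture classifier; how EchoNose actually derives its features and trains its model is described in the paper itself, not here.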